The Emognition dataset is dedicated to testing methods for emotion recognition (ER) from physiological responses and facial expressions. We collected data from 43 participants who watched short film clips eliciting nine discrete emotions: amusement, awe, enthusiasm, liking, surprise, anger, disgust, fear, and sadness. Three wearables were used to record physiological data: EEG, BVP (2x), HR, EDA, SKT, ACC (3x), and GYRO (2x), in parallel with the upper-body videos. After each film clip, participants completed two types of self-reports: (1) related to nine discrete emotions and (2) related to three affective dimensions: valence, arousal, and motivation. The obtained data facilitates various ER approaches, e.g., multimodal ER, EEG- vs. cardiovascular-based ER, and transitions between discrete and dimensional representations. The technical validation indicated that watching film clips elicited the targeted emotions. It also supported the high quality of the recorded signals.

Measurement(s): cardiac output measurement • Electroencephalography • Galvanic Skin Response • Temperature • acceleration • facial expressions

Technology Type(s): photoplethysmogram • electroencephalogram (5 electrodes) • electrodermal activity measurement • Sensor • Accelerometer • Video Recording


The ability to recognize human emotions based on physiology and facial expressions opens up important research and application opportunities, mainly in healthcare and human-computer interaction. Continuous affect assessment can help patients suffering from affective disorders 1 and children with autism spectrum disorder 2 . On a larger scale, promoting emotional well-being is likely to increase public health, improve the quality of life, and prevent some mental problems 3,4 . Emotion recognition could also enhance interaction with robots – they would better and less obtrusively understand the user’s commands, needs, and preferences 5 . Furthermore, difficulty in video games could be adjusted to the user’s emotional feedback 6 . Recommendations for movies, music, search engine results, user interface, and content may be enriched with the user’s emotional context 7,8 .

To achieve market-ready and evidence-based solutions, the machine learning models detecting and classifying affect and emotions need improvement. Such models require a large amount of data collected from several affective outputs to train complex, data-intensive deep learning architectures. Over the last decade, several datasets on physiological responses and facial expressions to affective stimuli have been published, e.g., POPANE 9 , BIRAFFE 10 , QAMAF 11 , ASCERTAIN 12 , DECAF 13 , MAHNOB-HCI 14 , and DEAP 15 . However, these datasets have limitations, such as using only dimensional scales to capture participants’ emotional state rather than asking about discrete emotions.

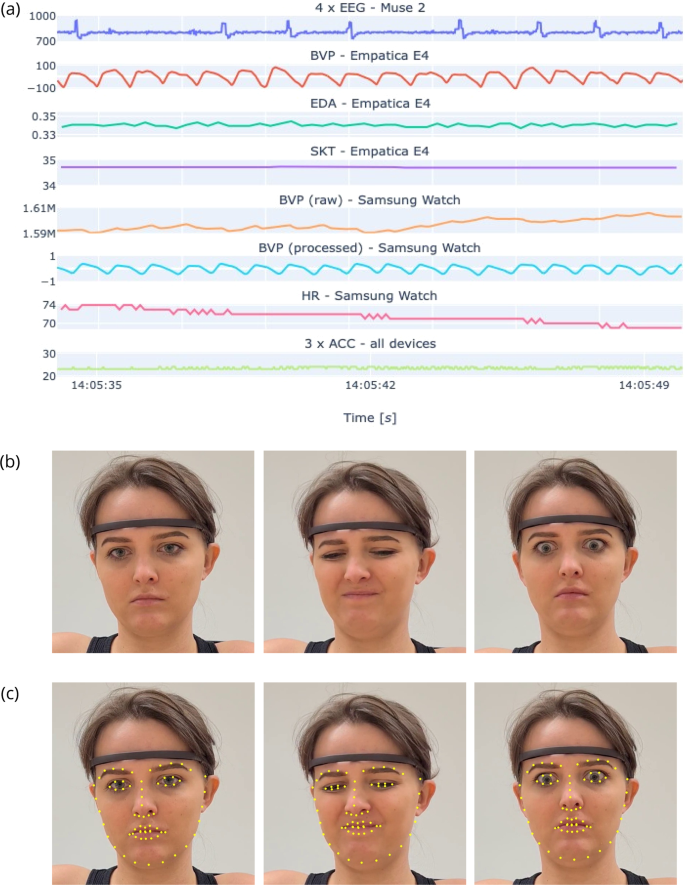

The Emognition dataset contains physiological signals and upper-body recordings of 43 participants who watched validated emotionally arousing film clips targeted at nine discrete emotions. The autonomic nervous system responses to the stimuli were recorded with consumer-grade wearables: Muse 2 equipped with electroencephalograph (EEG), accelerometer (ACC), and gyroscope (GYRO) sensors; Empatica E4 measuring and providing blood volume pulse (BVP), electrodermal activity (EDA), and skin temperature (SKT), and also providing interbeat interval (IBI) and ACC data; Samsung Galaxy Watch measuring and providing BVP, and also providing heart rate (HR), peak-to-peak interval (PPI), ACC, GYRO, and rotation data. The participants reported their emotions using discrete and dimensional questionnaires. The technical validation supported that participants experienced the targeted emotions and that the obtained signals are of high quality.

The Emognition dataset offers the following advantages over the previous datasets: (1) the physiological signals have been recorded using wearables which can be applied unobtrusively in everyday life scenarios; (2) the emotional state has been represented with two types of emotional models, i.e., discrete and dimensional; (3) nine distinct emotions were reported; (4) we put an emphasis on the differentiation between positive emotions; thus, this is the only dataset featuring four discrete positive emotions; the differentiation is important because studies indicated that specific positive emotions might differ in their physiology 16,17,18 ; (5) the dataset enables versatile analyses within emotion recognition (ER) from physiology and facial expressions.

The Emognition dataset may serve to tackle the research questions related to: (1) multimodal approach to ER; (2) physiology-based ER vs. ER from facial expressions; (3) ER from EEG vs. ER from BVP; (4) ER with Empatica E4 vs. ER using Samsung Watch (both providing BVP signal collected in parallel); (5) classification of positive vs. negative emotions; (6) affect recognition – low vs. high arousal and valence; (7) analyses between discrete and dimensional models of emotions.

The study was approved by and performed in accordance with the guidelines and regulations of the Wroclaw Medical University, Poland; approval no. 149/2020. The submission to the Ethical Committee covered, among others, participant consent, research plans, recruitment strategy, data management procedures, and GDPR issues. Participants provided written informed consent, in which they declared that they (1) were informed about the study details; (2) understood what the research involves; (3) understood what their consent was needed for; (4) could refuse to participate in the research at any time during the research project; (5) had the opportunity to ask questions of the experimenter and receive answers to those questions. Finally, participants gave informed consent to participate in the research, agreed to be recorded during the study, and consented to the processing of their personal data to the extent necessary for the implementation of the research project, including sharing their psycho-physiological and behavioral data with other researchers.

The participants were recruited via a paid advertisement on Facebook. Seventy people responded to the advertisement. We excluded ten non-Polish-speaking volunteers. An additional 15 could not find a suitable date, and two did not show up for the scheduled study. As a result, we collected data from 43 participants (21 females) aged between 19 and 29 (M = 22.37, SD = 2.25). All participants were Polish.

The exclusion criteria were significant health problems, use of drugs and medications that might affect cardiovascular function, prior diagnosis of cardiovascular disease, hypertension, or BMI over 30 (classified as obesity). We asked participants to reschedule if they experienced an illness or a major negative life event. The participants were requested (1) not to drink alcohol and not to take psychoactive drugs 24 hours before the study; (2) to refrain from caffeine, smoking, and taking nonprescription medications for two hours before the study; (3) to avoid vigorous exercise and eating an hour before the study. Such measures were undertaken to eliminate factors that could affect cardiovascular function.

All participants provided written informed consent and received a 50 PLN (ca. $15) online store voucher.

We used short film clips from databases with prior evidence of reliability and validity in eliciting targeted emotions 19,20,21,22,23 . The source film, selected scene, and stimulus duration are provided in Table 1.

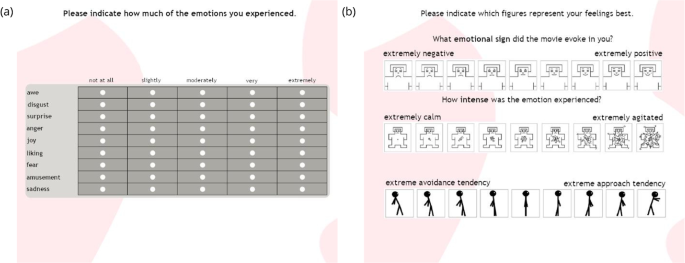

For the dimensional approach, participants reported retrospectively, using single-item rating scales, on how much valence, arousal, and motivation they experienced while watching the film clips. The 3-dimensional emotional self-report was collected with the Self-Assessment Manikin – SAM 28 . The SAM is a validated nonverbal visual assessment developed to measure affective responses. Participants reported felt emotions using a graphical scale ranging from 1 (a very sad figure) to 9 (a very happy figure) for valence, Fig. 1b; and from 1 (a calm figure) to 9 (an agitated figure) for arousal, Fig. 1b. We also asked participants to report their motivational tendency using a validated graphical scale modeled after the SAM 29 , i.e., whether they felt the urge to avoid or approach while watching the film clips, from 1 (figure leaning backward) to 9 (figure leaning forward) 30 , Fig. 1b. The English versions of the self-reports used in the study are illustrated in Fig. 1.

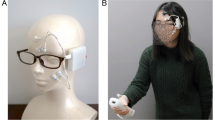

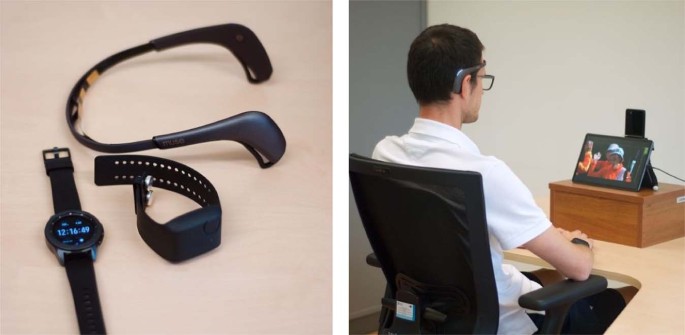

The behavioral and physiological signals were gathered using three wearable devices and a smartphone:

The Muse 2 has lower reliability than medical devices but is sufficient for nonclinical trial settings 32 . It has been successfully used to observe and quantify event-related brain potentials 33 , as well as to recognize emotions 34 . The Empatica E4 has been compared with a medical electrocardiograph (ECG) and proved to be a practical and valid tool for studies on HR and heart rate variability (HRV) in stationary conditions 35 . It was also as effective as the Biopac MP150 in the emotion recognition task 36 . Moreover, we have used the Empatica E4 for intense emotion detection with promising results in a field study 37,38 . The Samsung Watch devices were successfully utilized (1) to track atrial fibrillation with an ECG patch as a reference 39 , and (2) to assess sleep quality with a medically approved actigraphy device as a baseline 40 . Moreover, Samsung Watch 3 performed well in detecting intense emotions 41 .

Additionally, a 10.4-inch tablet Samsung Galaxy Tab S6 was used to guide participants through the study. A dedicated application was developed to instruct the participants, present stimuli, collect self-assessments, as well as gather Empatica E4 signals, and synchronize them with the stimuli.

The sampling rate of the collected signals is provided in Table 2. The devices and the experimental stand are illustrated in Fig. 2.

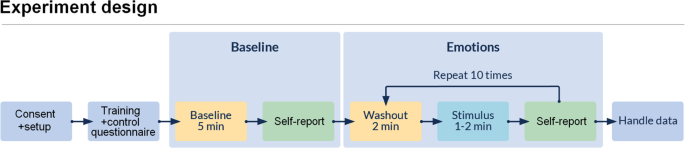

The study was conducted between the 16th of July and the 4th of August, 2020. It took place in the UX Wro Lab - a laboratory at the Wrocław University of Science and Technology. Upon arrival, participants were informed about the experimental procedure, Fig. 3. They then signed the written consent. The researcher applied the devices approximately five minutes before the experiment so that the participants could get familiar with them. It also enabled a proper skin temperature measurement. From this stage until the end of the experiment, the physiological signals were recorded. Next, participants listened to instructions about the control questionnaire and self-assessments. The participants filled out the control questionnaire about their activity before the experiment, e.g., time since the last meal or physical activity and wake-up time. Their responses are part of the dataset.

The participants were asked to avoid unnecessary actions or movements (e.g., swinging on the chair) and not to cover their faces. They were also informed that they could skip any film clip or quit the experiment at any moment. Once the procedure was clear to the participants, they were left alone in the room but could ask the researcher for help anytime. For the baseline, participants watched dots and lines on a black screen for 5 minutes (physiological baseline) and reported current emotions (emotional baseline) using discrete and dimensional measures. The main part of the experiment consisted of ten iterations of (1) a 2-minute washout clip (dots and lines), (2) the emotional film clip, and (3) two self-assessments, see Fig. 3. The order of film clips was counterbalanced using a Latin square, i.e., we randomized clips for the first participant and then shifted by one film clip for each next participant so that the first film clip was placed as the last one.
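The rotation scheme described above can be sketched in a few lines of Python. This is a minimal illustration, not the code used in the study: the function name `counterbalanced_orders`, the placeholder clip labels, and the fixed random seed are all assumptions introduced here.

```python
import random


def counterbalanced_orders(clips, n_participants, seed=0):
    """Return one presentation order per participant.

    The first participant gets a random permutation of the clips; each
    next participant gets the previous order shifted by one, so that the
    previously first clip is moved to the end.
    """
    rng = random.Random(seed)  # fixed seed only to make the sketch repeatable
    base = list(clips)
    rng.shuffle(base)
    orders = []
    for i in range(n_participants):
        shift = i % len(base)  # wrap around after a full cycle
        orders.append(base[shift:] + base[:shift])
    return orders


# Hypothetical labels for the ten film clips shown in the main part.
clips = [f"clip_{i}" for i in range(10)]
orders = counterbalanced_orders(clips, n_participants=43)
```

Under these assumptions, every block of ten consecutive participants sees each clip exactly once in each position; with 43 participants, the last block is incomplete, so positions are only approximately balanced.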

After the experiment, participants provided information about which movies they had seen before the study and other remarks about the experiment. Concluding the procedure, participants received the voucher. The whole experiment lasted about 50 minutes, depending on the time spent on the questionnaires.

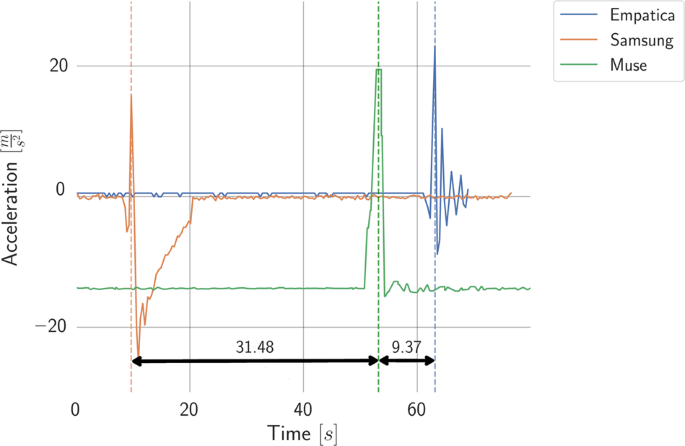

Empatica E4 was synchronized with the stimuli out-of-the-box using a custom application and Empatica E4 SDK. Samsung Watch and Muse 2 devices were synchronized using accelerometer signals. All three devices were placed on the table, which was then hit with a fist. The first peak in the ACC signal was used to find the time shift between the devices, Fig. 4. All times were synchronized to the Empatica E4 time.
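The idea of aligning devices on the first ACC peak can be illustrated as follows. This is a hedged sketch under stated assumptions: timestamps are integers in milliseconds, the ACC signal has already been reduced to a magnitude per sample, and the peak threshold is chosen by hand. The helper names `first_peak_time` and `time_shift` are hypothetical and do not come from the dataset code.

```python
def first_peak_time(timestamps, magnitudes, threshold):
    """Return the timestamp of the first local maximum above `threshold`."""
    for i in range(1, len(magnitudes) - 1):
        if (magnitudes[i] > threshold
                and magnitudes[i] >= magnitudes[i - 1]
                and magnitudes[i] > magnitudes[i + 1]):
            return timestamps[i]
    raise ValueError("no peak above threshold")


def time_shift(reference, other, threshold):
    """Shift to add to `other` timestamps so its first peak matches `reference`."""
    t_ref = first_peak_time(reference[0], reference[1], threshold)
    t_other = first_peak_time(other[0], other[1], threshold)
    return t_ref - t_other


# Synthetic example (assumed values): the reference clock sees the hit at
# 400 ms, the other device's clock reports the same hit at 450 ms.
ref_t = list(range(0, 1000, 10))
ref_mag = [1.0] * 100
ref_mag[40], ref_mag[41] = 3.0, 2.0

other_t = list(range(250, 1250, 10))
other_mag = [1.0] * 100
other_mag[20], other_mag[21] = 3.2, 1.8

shift_ms = time_shift((ref_t, ref_mag), (other_t, other_mag), threshold=2.5)
aligned_other_t = [t + shift_ms for t in other_t]
```

In this toy case the computed shift is -50 ms, and after applying it the peak of the second device falls on the same timestamp as the reference peak.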

Each device stored data in a different format and structure. We unified the data to JSON format and divided the experiment into segments covering washouts, film clips, and self-assessment separately. We provide the raw recordings from all used devices. Additionally, we performed further preprocessing for some devices/data and provide it alongside the raw data.

For EEG, the raw signal represents the signal filtered with a 50 Hz notch frequency filter, which is a standard procedure to remove interference caused by power lines. Besides the raw EEG, the Mind Monitor application provides the absolute band power for each channel and five standard frequency ranges (i.e., delta to gamma, see Table 2). According to the Mind Monitor documentation, these are obtained by (1) using a fast Fourier transform (FFT) to compute the power spectral density (PSD) for frequencies in each channel, (2) summing the PSDs over a frequency range, and (3) taking the logarithm of the sum, to get the result in Bels (B). Details are presented in the Mind Monitor documentation (https://mind-monitor.com).
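The three steps listed above (spectrum, sum over a band, logarithm) can be illustrated with a small self-contained sketch. It is not the Mind Monitor implementation: the PSD scaling (squared magnitude divided by the number of samples), the band edges, the sampling rate, and the function name `band_power_bels` are assumptions made for this example. A direct discrete Fourier transform is used instead of an FFT to avoid external dependencies; both yield the same coefficients.

```python
import cmath
import math


def band_power_bels(samples, fs, f_lo, f_hi):
    """Log10 of the summed PSD in [f_lo, f_hi), i.e., band power in Bels."""
    n = len(samples)
    total = 0.0
    for k in range(n // 2 + 1):
        freq = k * fs / n  # frequency of the k-th DFT bin in Hz
        if f_lo <= freq < f_hi:
            x_k = sum(s * cmath.exp(-2j * math.pi * k * t / n)
                      for t, s in enumerate(samples))
            total += abs(x_k) ** 2 / n  # assumed PSD scaling
    return math.log10(total) if total > 0 else float("-inf")


# Synthetic one-second signal (assumed fs): a 10 Hz component plus a
# ten times weaker 20 Hz component.
fs = 128
signal = [math.sin(2 * math.pi * 10 * t / fs)
          + 0.1 * math.sin(2 * math.pi * 20 * t / fs)
          for t in range(fs)]

alpha_bels = band_power_bels(signal, fs, 8, 13)   # hypothetical alpha band
beta_bels = band_power_bels(signal, fs, 13, 30)   # hypothetical beta band
```

Because the result is logarithmic, the hundredfold difference in power between the two components appears as a difference of two Bels between the bands.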

The processing of the BVP signal from the Samsung Watch PPG sensor consisted of subtracting the mean component, eight-level decomposition using the Coiflet1 wavelet transform, and then reconstructing the signal by the inverse wavelet transform based only on the second and third levels. Amplitude fluctuations were reduced by dividing the middle sample of a one-second-long sliding window (with an odd number of samples) by the standard deviation of the signal within that window. The final step was signal normalization to the range of [−1,1].
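The amplitude-related steps of this pipeline can be sketched as below. The wavelet decomposition and reconstruction are deliberately omitted (they would require a wavelet library), so this is only a partial illustration. The function name `normalize_bvp`, the handling of window edges, the reading of the sliding-window step as "centre sample divided by the window's standard deviation", and scaling by the maximum absolute value are assumptions, not the authors' exact procedure.

```python
import math
import statistics


def normalize_bvp(signal, fs):
    """Mean removal, sliding-window std normalization, scaling to [-1, 1]."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]

    win = int(fs) | 1  # about one second, forced to an odd number of samples
    half = win // 2
    equalized = []
    for i in range(n):
        lo, hi = max(0, i - half), min(n, i + half + 1)  # truncated at edges
        sd = statistics.pstdev(centered[lo:hi])
        equalized.append(centered[i] / sd if sd > 0 else 0.0)

    peak = max(abs(v) for v in equalized)
    return [v / peak for v in equalized] if peak > 0 else equalized


# Synthetic pulse-like signal (assumed values): 1 Hz oscillation sampled at
# 25 Hz whose amplitude grows over eight seconds.
fs = 25
raw = [(1 + 2 * t / 200) * math.sin(2 * math.pi * t / fs) for t in range(200)]
normalized = normalize_bvp(raw, fs)
```

Dividing by a local standard deviation flattens slow changes in pulse amplitude, which is the stated purpose of this step; the final division only rescales the result.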

The upper-body recordings were processed with the OpenFace toolkit 42,43,44 (version 2.2.0, default parameters) and Quantum Sense software (Research Edition 2017, Quantum CX, Poland). The OpenFace library provides facial landmark points and action units’ values, whereas Quantum Sense recognizes basic emotions (neutral, anger, disgust, happiness, sadness, surprise) and head pose.

Some parts of the signals were of lower quality due to the participants’ movement or improper mounting. For example, the quality of the EEG signal can be investigated using the Horse Shoe Indicator (HSI) values provided by the device, which represent how well the electrodes fit the participant’s head. For video clips, OpenFace provides information about detected faces and their head pose for each frame. We have not removed low-quality signals so that users of the dataset can decide how to deal with them. Any data-related problems that we identified are included in the data_completeness.csv file.

Collected data (physiological signals, upper-body recordings, self-reports, and control questionnaires) are available at Harvard Dataverse Repository 45 . The types of data available in the Emognition dataset are illustrated in Fig. 5. The upper-body recordings in an MP4 format, full HD resolution (1920 × 1080) constitute 76GB of space. The other data is compressed into the study_data.zip package of size 1GB (16GB after decompression). The data are grouped by participants. Each participant has their folder containing files from all experimental stages (stimulus presentation, washout, self-assessment) and all devices (Muse 2, Empatica E4, Samsung Watch). In total, each participant has 97 files related to:

Additionally, facial annotations are provided in two ZIP packages, OpenFace.zip and Quantum.zip, respectively. The OpenFace package contains facial landmark points and action units’ values (7.4GB compressed, 25GB after decompression). The Quantum Sense package contains values of six basic emotions and head position (0.7GB compressed, 4.7GB after decompression). The values are assigned per video frame.

The files are in JSON format, except OpenFace annotations in CSV format. More technical information (e.g., file naming conventions, variables available in each file) is provided in the README.txt file included in the dataset.

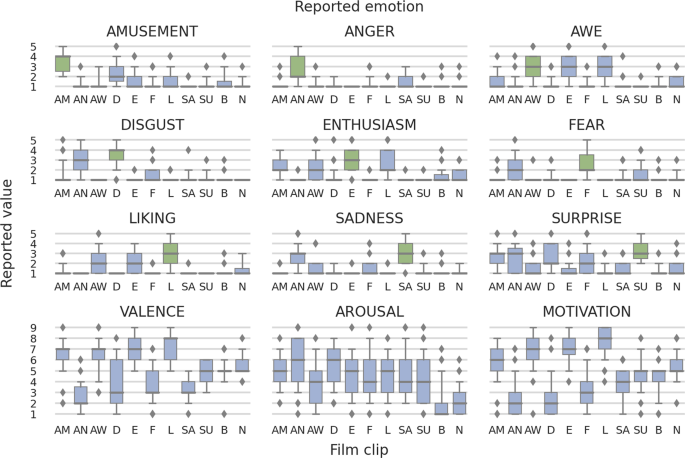

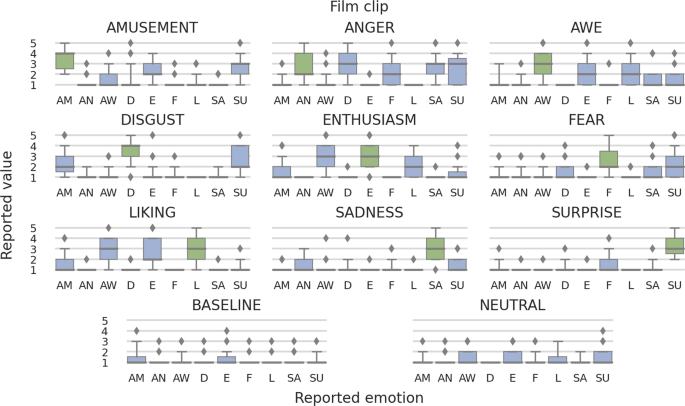

To test whether film clips elicit targeted emotions, we used repeated-measures analysis of variance (rmANOVA) with Greenhouse-Geisser correction and calculated recommended effect sizes of \(\eta_p^2\) for ANOVA tests 46,47 . To examine differences between the conditions (e.g., whether self-reported amusement in response to the amusing film clips was higher than it was reported in response to the other film clips), we calculated pairwise comparisons with Bonferroni correction of p-values for multiple comparisons.
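As a brief illustration of the Bonferroni correction mentioned above, each p-value is multiplied by the number of comparisons and capped at 1. The sketch below uses made-up p-values and a hypothetical function name; it does not reproduce the reported analysis.

```python
def bonferroni(p_values):
    """Bonferroni-adjusted p-values: p * m, capped at 1.0."""
    m = len(p_values)  # number of comparisons in the family
    return [min(1.0, p * m) for p in p_values]


# Hypothetical raw p-values from three pairwise comparisons.
raw_p = [0.01, 0.04, 0.5]
adjusted_p = bonferroni(raw_p)
significant = [p < 0.05 for p in adjusted_p]
```

With three comparisons, only the first hypothetical p-value stays below 0.05 after adjustment, which shows how the correction guards against false positives when many pairs are tested.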

As summarized in Tables 3, 4, Figs. 6 and 7, watching film clips evoked the targeted emotions. The differences in self-reported emotions in film clips should be interpreted as large 48 . Pairwise comparisons indicated that self-reported targeted emotions were the highest in the corresponding film clip condition (e.g., self-reported amusement in response to the amusing film clip). Furthermore, we observed that some emotions were intense in more than one film clip condition and some film clips elicited more than one emotion. These are frequent effects during emotion elicitation procedures 22,29,30 , see Supp. Mat. for details.

To validate the quality of the recorded physiological signals, we computed signal-to-noise ratios (SNRs) by fitting the second-order polynomial to the data obtained from the autocorrelation function. It was done separately for all physiological recordings (all participants, baselines, film clips, and experimental stages, see Sec. Data Records). SNR statistics indicated the signals’ high quality. Mean SNR ranged from 26.66 dB to 37.74 dB, with standard deviations from 2.27 dB to 11.13 dB. For one signal, the minimum SNR was 0.88 dB. However, 99.7% of its recordings had SNR values over 5.15 dB. As the experiments were conducted in a sitting position, we did not analyze signals from accelerometers and gyroscopes. For details, see Supp. Mat. Table 3.
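One common way to estimate SNR from the autocorrelation function is sketched below; it is only an assumption about how such a procedure may look, not the validation code itself. Uncorrelated noise contributes to the autocorrelation at lag 0 only, so a second-order polynomial fitted to the values at small nonzero lags and extrapolated to lag 0 approximates the signal power, and the remainder at lag 0 is attributed to noise. The choice of lags 1 to 3 (which makes the fit exact) and the function names are hypothetical.

```python
import math
import random


def autocorr(x, lag):
    """Biased autocorrelation estimate of a zero-mean sequence at `lag`."""
    n = len(x)
    return sum(x[t] * x[t + lag] for t in range(n - lag)) / n


def snr_db(x):
    """SNR estimate in dB from the autocorrelation at lags 0 to 3."""
    mean = sum(x) / len(x)
    x = [v - mean for v in x]
    r0, r1, r2, r3 = (autocorr(x, k) for k in range(4))
    # Quadratic through lags 1, 2, 3 evaluated at lag 0.
    signal_power = 3 * r1 - 3 * r2 + r3
    noise_power = r0 - signal_power
    if signal_power <= 0 or noise_power <= 0:
        raise ValueError("SNR cannot be estimated for this sequence")
    return 10 * math.log10(signal_power / noise_power)


# Synthetic check (assumed values): a slow sine wave with weak or strong
# white Gaussian noise added.
rng = random.Random(0)
clean = [math.sin(2 * math.pi * t / 100) for t in range(5000)]
low_noise = [s + rng.gauss(0, 0.1) for s in clean]
high_noise = [s + rng.gauss(0, 0.5) for s in clean]

snr_low_noise = snr_db(low_noise)
snr_high_noise = snr_db(high_noise)
```

For these synthetic inputs, the theoretical values are about 17 dB and 3 dB, respectively. The estimate is only meaningful when the underlying signal varies slowly relative to the sampling rate and the noise is approximately white.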

Additionally, the Quantum Sense annotations were analyzed to see how well the software recognized emotions. In general, it performed well within conditions, but poorly between conditions. The main reasons behind wrong or missing annotations were participants covering their faces with their palms or leaning towards the camera. In some cases, participants had already seen the movie and reacted differently, e.g., smiled instead of being disgusted. For details, see Supp. Mat. Sec. Analysis of Quantum Sense Results.

The most common approach to emotion recognition from physiological signals includes (1) data collection and cleaning; (2) signal preprocessing, synchronization, and integration; (3) feature extraction and selection; and (4) machine learning model training and validation. A comprehensive overview of all these stages can be found in our review on emotion recognition using wearables 49 .

For further processing of the Emognition dataset, we recommend the following Python libraries, which we analyzed and found useful for feature extraction from physiological data. The pyPhysio library 50 (https://github.com/MPBA/pyphysio) facilitates the analysis of ECG, BVP, and EDA signals by providing algorithms for filtering, segmentation, extracting derivatives, and other signal processing. The BioSPPy library (https://biosppy.readthedocs.io/) handles BVP, ECG, EDA, EEG, EMG, and respiration signals. For example, it filters BVP, performs R-peak detection, and computes the instantaneous HR. The Ledalab library 51 focuses on the EDA signal and offers both continuous and discrete decomposition analyses. The Kubios software (https://www.kubios.com) enables data import from several HR, ECG, and PPG monitors, and calculates over 40 features from the HRV signal. The PyEEG library (https://github.com/forrestbao/pyeeg) is intended for EEG signal analysis and processing, but it works with any time series data.

Emotion recognition from facial expressions can be achieved by processing video images 52 . At first, the face has to be detected, and landmarks (distinctive points in facial regions) need to be identified. Tracing the position of landmarks between video frames allows us to measure muscle activity and encode it into Action Units (AUs). AUs can be used to identify emotions as proposed by Ekman and Friesen in their Facial Action Coding System (FACS) 53 .
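To make the last step more concrete, the sketch below flags video frames in which two AUs often associated with a happiness prototype, AU6 (cheek raiser) and AU12 (lip corner puller), are both marked as present. The column names `frame`, `AU06_c`, and `AU12_c` follow the OpenFace output convention, but the inline CSV text, the function name, and the choice of this single AU combination are assumptions made for illustration; it is not a validated emotion classifier.

```python
import csv
import io


def happiness_like_frames(csv_text):
    """Return frame numbers where AU06 and AU12 are both marked present."""
    reader = csv.DictReader(io.StringIO(csv_text), skipinitialspace=True)
    frames = []
    for row in reader:
        # Strip stray whitespace that some exports leave around column names.
        row = {key.strip(): value for key, value in row.items()}
        if float(row["AU06_c"]) == 1.0 and float(row["AU12_c"]) == 1.0:
            frames.append(int(row["frame"]))
    return frames


# Made-up excerpt in the style of an OpenFace CSV file.
sample_csv = (
    "frame, AU06_c, AU12_c\n"
    "1, 0.00, 0.00\n"
    "2, 1.00, 1.00\n"
    "3, 0.00, 1.00\n"
)
flagged = happiness_like_frames(sample_csv)
```

In practice, AU intensities (the `_r` columns) and temporal smoothing would likely be needed as well, since single-frame presence flags are noisy.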

The use of the Emognition dataset is limited to academic research purposes only due to the consent given by the participants. The data will be made available after completing the End User License Agreement (EULA). The EULA is located in the dataset repository. It should be signed and emailed to the Emognition Group at emotions@pwr.edu.pl. The mail has to be sent from an academic email address associated with the Harvard Dataverse platform account.

The code used for the technical validation is publicly available at https://github.com/Emognition/Emognition-wearable-dataset-2020. The code was developed in Python 3.7. The repository contains several Jupyter Notebooks with data manipulations and visualizations. All required packages are listed in the requirements.txt file. The repository may be used as a starting point for further data analyses, as it allows users to easily load and preview the Emognition dataset.

The authors would like to thank the Emognition research group members who contributed to data collection: Anna Dutkowiak, Adam Dziadek, Weronika Michalska, and Michał Ujma, as well as Grzegorz Suszka for his help in processing facial recordings. The authors would also like to thank Prof. Janusz Sobecki for making the UX Wro Lab available for the experiment. This work was partially supported by the National Science Centre, Poland, projects no. 2020/37/B/ST6/03806 and 2020/39/B/HS6/00685; by the statutory funds of the Department of Artificial Intelligence, Wroclaw University of Science and Technology; and by the Polish Ministry of Education and Science (CLARIN-PL Project).